Automate Posting Hugo Blog to Social Sites (with a db)

Background

In the previous few posts I detailed my progress in automating a site. I am going about this by using an RSS scraper to publish new posts to social sites.

I had initially thought about doing this really naively, but I want a database. It doesn't feel right without one. I am somewhat upset with myself, because I am basically just recreating WordPress... but so it goes.

Previous posts in this series

Expand a previous script

In a previous post I wrote about how to scan an RSS feed on my personal site. In this post I will expand upon that to update some tables in a MySQL database that I generated in an earlier post.

Add support for MySQL

MySQL Connector/Python manual

- https://dev.mysql.com/doc/connector-python/en/connector-python-introduction.html

Install connector with pip

I created a virtual environment prior to starting this exercise. Review the virtualenv documentation for more information. The source below is my path to the virtual environment's bin.

I am installing the connector and the xdev extensions. TBH I do not know what the extensions are, but I'm just going to go ahead and install them now before I write a script that ends up needing those extra libs.

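The command chains three steps with &&: source the activate script in the virtual environment's bin, then pip install the connector, then pip install the xdev extensions.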
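Once pip finishes, it can be worth confirming from Python that both packages are importable inside the virtual environment. The sketch below is only a sanity check. It assumes the connector is importable as mysql.connector and the X DevAPI extension as mysqlx, and the helper name is_installed is made up for illustration.

```python
import importlib.util


def is_installed(module_name):
    """Return True if module_name can be located without fully importing it."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except ModuleNotFoundError:
        # Raised when the parent package of a dotted name is missing
        return False


# Assumed module names: mysql.connector (connector) and mysqlx (X DevAPI)
for name in ("mysql.connector", "mysqlx"):
    print(name, "installed" if is_installed(name) else "missing")
```

If either line prints "missing", the virtual environment was probably not active when pip ran.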

Install python-dotenv with pip

I plan to containerize this later. Using .env files from the start will be a good way of making this portable.

Create a .env file with your environmental variables

DB_USER=cobra

DB_PASSWORD=password

DB_HOST=127.0.0.1

DB_NAME=posts
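To show how these values reach a script, here is a minimal sketch that reads the four variables and fails early if one is missing. The function names read_db_settings and load_settings_from_dotenv are hypothetical. The only python-dotenv call used is load_dotenv, which copies values from a .env file into the process environment.

```python
import os

REQUIRED_KEYS = ("DB_USER", "DB_PASSWORD", "DB_HOST", "DB_NAME")


def read_db_settings(env=None):
    """Return the four DB_* settings, failing early if any is missing or empty."""
    env = os.environ if env is None else env
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        raise RuntimeError("Missing settings: " + ", ".join(missing))
    return {key: env[key] for key in REQUIRED_KEYS}


def load_settings_from_dotenv():
    """Load the .env file into the environment, then read the settings."""
    # Imported lazily so read_db_settings() works without python-dotenv installed
    from dotenv import load_dotenv

    load_dotenv()  # looks for a .env file and copies its values into os.environ
    return read_db_settings()
```

Passing a plain dict to read_db_settings makes it easy to test without touching the real environment.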

Create a utility class to be able to reuse this code

Connector method reference

https://dev.mysql.com/doc/connector-python/en/connector-python-api-mysqlconnection.html

Something like below is a good way to get started.

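The class stores the connection settings as attributes and keeps a connection attribute that starts as None until a connection is opened. The usage example loads the environment variables from the .env file first and then creates an instance.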
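A minimal sketch of such a utility class follows. The class name MySQLDatabase and its method names are assumptions for illustration. The connector calls used are mysql.connector.connect plus is_connected and close on the connection object. The connector and python-dotenv are imported inside the functions so the file still loads if either one is missing.

```python
import os


class MySQLDatabase:
    """Small reusable wrapper around a mysql.connector connection."""

    def __init__(self, host, user, password, database):
        self.host = host
        self.user = user
        self.password = password
        self.database = database
        self.connection = None  # populated by connect()

    @classmethod
    def from_env(cls, env=None):
        """Build an instance from the DB_* variables defined in the .env file."""
        env = os.environ if env is None else env
        return cls(
            host=env["DB_HOST"],
            user=env["DB_USER"],
            password=env["DB_PASSWORD"],
            database=env["DB_NAME"],
        )

    def connect(self):
        """Open the connection and keep it on the instance."""
        import mysql.connector  # lazy import, see note above

        self.connection = mysql.connector.connect(
            host=self.host,
            user=self.user,
            password=self.password,
            database=self.database,
        )
        return self.connection

    def disconnect(self):
        """Close the connection if one is open."""
        if self.connection is not None and self.connection.is_connected():
            self.connection.close()
        self.connection = None


def main():
    # Usage example
    # Load environment variables from .env file
    from dotenv import load_dotenv

    load_dotenv()
    db = MySQLDatabase.from_env()
    db.connect()
    print("Connected:", db.connection.is_connected())
    db.disconnect()
```

Call main() from a script or a Python shell once the server is running. It is left uncalled here so that importing the file never opens a connection by accident.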

Verify that your server is running

Check that the MySQL server is up before running anything. On most *nix-ish systems the service manager will report its status.
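If you would rather check from Python, a rough alternative is to see whether anything is listening on the server's port. This sketch assumes the server uses MySQL's default port 3306. The function name port_is_open is made up, and an open port only shows that something is listening, not that your credentials work.

```python
import socket


def port_is_open(host, port=3306, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts
        return False
```

With the .env values above, port_is_open("127.0.0.1") should return True while the server is running.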

Run your test program

Note: do not name your file mysql.py. It will shadow the library's mysql package and the import will fail.
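A quick way to catch this mistake is to look for a local file or folder that would shadow the package. The sketch below is illustrative only. The function name find_shadowing is made up, and it checks just one directory, not the whole import path.

```python
from pathlib import Path


def find_shadowing(directory=".", package="mysql"):
    """List local names in directory that would shadow the given package."""
    base = Path(directory)
    # Both a module file (mysql.py) and a local folder (mysql/) can shadow it
    candidates = (base / f"{package}.py", base / package)
    return [path.name for path in candidates if path.exists()]
```

An empty list means nothing in that directory collides with the connector's package name.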

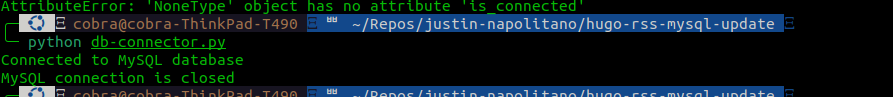

Output

The output should look something like this...